13. Recognition Exercise

In this exercise, you will continue building up your perception pipeline in ROS. You are provided with a very simple Gazebo world in which you can extract color and shape features from the objects you segmented from your point cloud in Exercise-1 and Exercise-2, and then use those features to train a classifier to detect them.
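To make the idea of a color feature concrete, here is a minimal sketch that turns the colors of one segmented object into a fixed-length vector by concatenating per-channel histograms. The function name `color_histogram_features`, the bin count, and the random stand-in data are assumptions for illustration, not the code shipped with the exercise.

```python
import numpy as np


def color_histogram_features(colors, nbins=32, value_range=(0, 256)):
    """Concatenate per-channel histograms of an (N, 3) color array
    into a single vector normalized to sum to 1."""
    colors = np.asarray(colors, dtype=float)
    channel_hists = [
        np.histogram(colors[:, ch], bins=nbins, range=value_range)[0]
        for ch in range(3)
    ]
    features = np.concatenate(channel_hists).astype(float)
    total = features.sum()
    # Guard against an empty cluster, which would otherwise divide by zero
    return features / total if total > 0 else features


# Hypothetical data: 500 random color values standing in for the points
# of one segmented object (a real pipeline would read them from the cloud)
rng = np.random.RandomState(0)
fake_colors = rng.randint(0, 256, size=(500, 3))

feature_vector = color_histogram_features(fake_colors)
print(feature_vector.shape)
```

Normalizing the histogram makes the feature independent of how many points the object contains, so large and small clusters of the same object produce comparable vectors.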

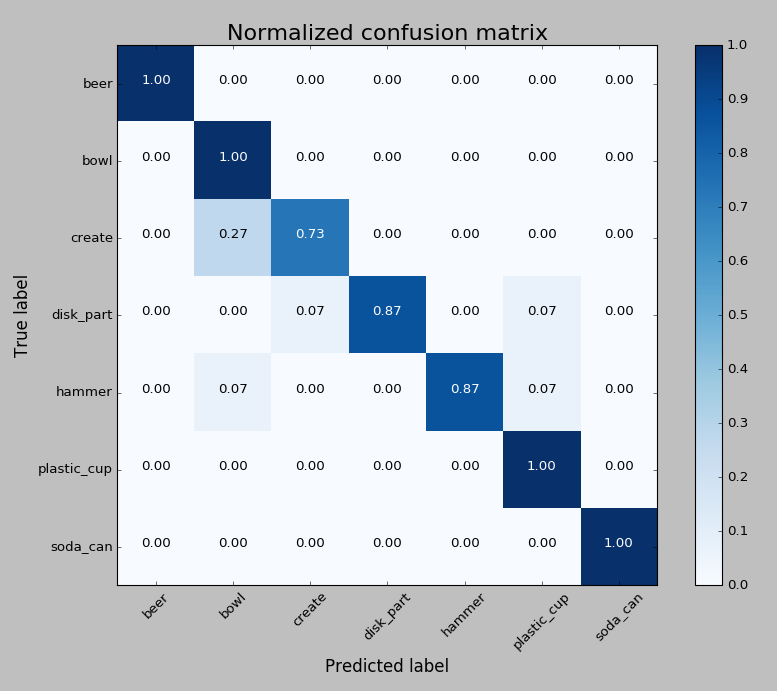

Your goal in this exercise is to train an SVM to recognize specific objects in the scene. You'll accomplish this by first extracting a training set of features and labels, then training your SVM classifier, and finally using the classifier to predict what objects are in your segmented point cloud.
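The three steps above (build a labeled training set, train the SVM, predict on a new cluster) can be sketched as follows. This assumes scikit-learn is available and uses synthetic feature vectors with made-up labels (`object_a`, `object_b`); in the exercise the features would come from your segmented point clouds instead.

```python
import numpy as np
from sklearn.preprocessing import LabelEncoder, StandardScaler
from sklearn.svm import SVC

rng = np.random.RandomState(0)

# Step 1: training set of features and labels.
# Synthetic stand-in: two hypothetical object classes, 20 samples each,
# with 6-dimensional feature vectors drawn around different means.
labels = ["object_a"] * 20 + ["object_b"] * 20
features = np.vstack([
    rng.normal(loc=0.0, scale=0.5, size=(20, 6)),
    rng.normal(loc=3.0, scale=0.5, size=(20, 6)),
])

# Encode string labels as integers and scale features to zero mean, unit variance
encoder = LabelEncoder()
y = encoder.fit_transform(labels)
scaler = StandardScaler().fit(features)
X = scaler.transform(features)

# Step 2: train the SVM classifier (a linear kernel is an assumption here)
clf = SVC(kernel="linear")
clf.fit(X, y)

# Step 3: predict the label of a new, unseen feature vector
new_cluster = rng.normal(loc=3.0, scale=0.5, size=(1, 6))
predicted = encoder.inverse_transform(clf.predict(scaler.transform(new_cluster)))
print(predicted[0])
```

The same scaler and label encoder fitted on the training data must be reused at prediction time; otherwise the new feature vector is not in the space the classifier was trained on.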

If you already cloned the RoboND-Perception-Exercises repository, all you need to do is run git pull in that repository to get the code for Exercise-3. Note: This exercise requires ROS, so you need to complete these steps in the VM provided by Udacity or on your own native Linux/ROS install.

Getting Set Up

If you completed Exercises 1 and 2, you will already have a sensor_stick folder in your ~/catkin_ws/src directory. You should first make a copy of the Python script (segmentation.py) that you wrote for Exercise-2, and then replace your old sensor_stick folder with the new sensor_stick folder contained in the Exercise-3 directory from the repository.

If you do not already have a sensor_stick directory, first copy / move the sensor_stick folder to the ~/catkin_ws/src directory of your active ROS workspace. From inside the Exercise-3 directory:

$ cp -r sensor_stick/ ~/catkin_ws/src/

- Make sure you have all the dependencies resolved by using the rosdep install tool and running catkin_make:

$ cd ~/catkin_ws

$ rosdep install --from-paths src --ignore-src --rosdistro=kinetic -y

$ catkin_make

- If they aren't already in there, add the following lines to your .bashrc file:

$ export GAZEBO_MODEL_PATH=~/catkin_ws/src/sensor_stick/models

$ source ~/catkin_ws/devel/setup.bash

You're now all set up to start the exercise!
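The .bashrc step says to add the two lines only if they are not already there. A small sketch of that check is shown below; the helper `ensure_lines` is hypothetical and not part of the exercise repository, and the call against the real ~/.bashrc is left commented out so nothing is modified unless you enable it.

```python
import os


def ensure_lines(path, lines):
    """Append each line to the file at `path` unless an identical line
    is already present. Returns the list of lines that were added."""
    content = ""
    if os.path.exists(path):
        with open(path) as f:
            content = f.read()
    existing = content.splitlines()
    missing = [line for line in lines if line not in existing]
    if missing:
        with open(path, "a") as f:
            # Start on a fresh line if the file does not end with a newline
            if content and not content.endswith("\n"):
                f.write("\n")
            f.write("\n".join(missing) + "\n")
    return missing


# The two lines from the setup instructions (without the `$ ` prompt)
BASHRC_LINES = [
    "export GAZEBO_MODEL_PATH=~/catkin_ws/src/sensor_stick/models",
    "source ~/catkin_ws/devel/setup.bash",
]

# To apply this to your real shell configuration, uncomment:
# ensure_lines(os.path.expanduser("~/.bashrc"), BASHRC_LINES)
```

Running the helper twice leaves the file unchanged the second time, which avoids piling up duplicate entries when the setup steps are repeated.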